Key takeaways

We use AI as a co-pilot: Audits start manually, then AI tools help map code and perform audit quality assurance. Most AI findings (~80%) are false positives and require human review.

Client confidentiality is paramount. Open-source audits may use public APIs, but closed-source code is analyzed on our internal AI server to keep data fully in-house.

We constantly improve: We benchmark models, fine-tune them on curated data, and use Socratic Q&A to enhance coverage and speed without replacing human expertise.

Introduction

Code audits are the backbone of software security. Whether reviewing smart contracts or open-source libraries, thorough auditing is the best safeguard — and your future self will thank you. At Security Research Labs, we continuously improve our audit approach: smarter, faster, and more effective.

At SRLabs, we use AI to support — not replace — our code auditors. It’s an assistive tool, not an autonomous decision-maker. Our seasoned experts lead the charge in every audit, ensuring every audit meets the highest standards. This article offers transparency on how we apply AI responsibly, keep human judgment central, and combine smart tools with deep expertise to give our clients a security edge without compromising trust.

The status quo of AI in our code audit workflow

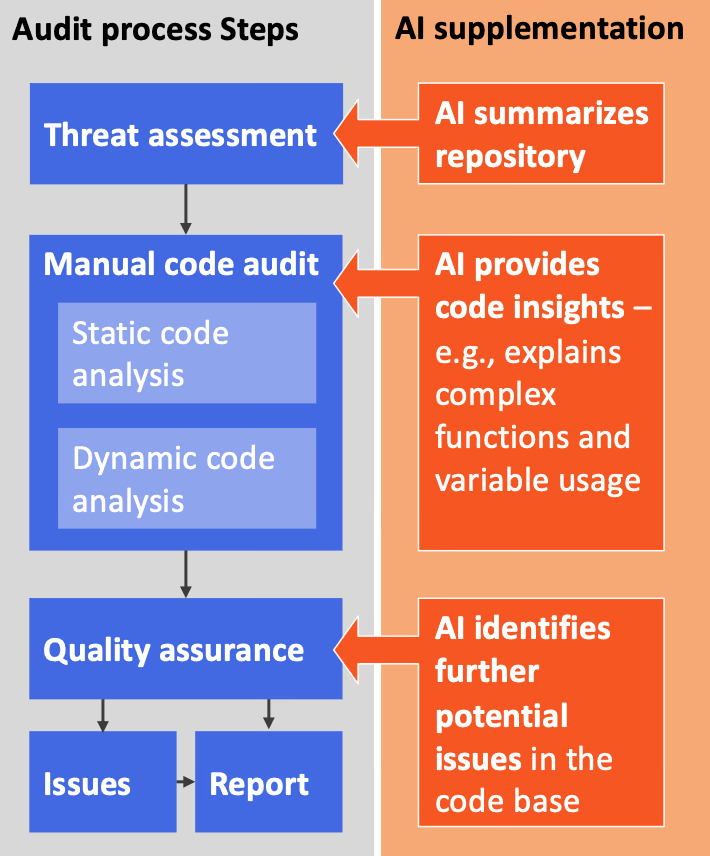

Our manual audit process is supplemented by AI tools in three ways: Summarizing the repository, providing code insights, and highlighting potential security issues.

Level 1: Repository summary

AI excels at digesting and summarizing large volumes of text both quickly and efficiently. Tools like Deepwiki take this further by summarizing entire code repositories, even providing dependency graphs. This enables us to grasp the high-level structure of a new codebase in minutes, so we can focus on the next phase: assessing threats based on the analyzed documents and code.

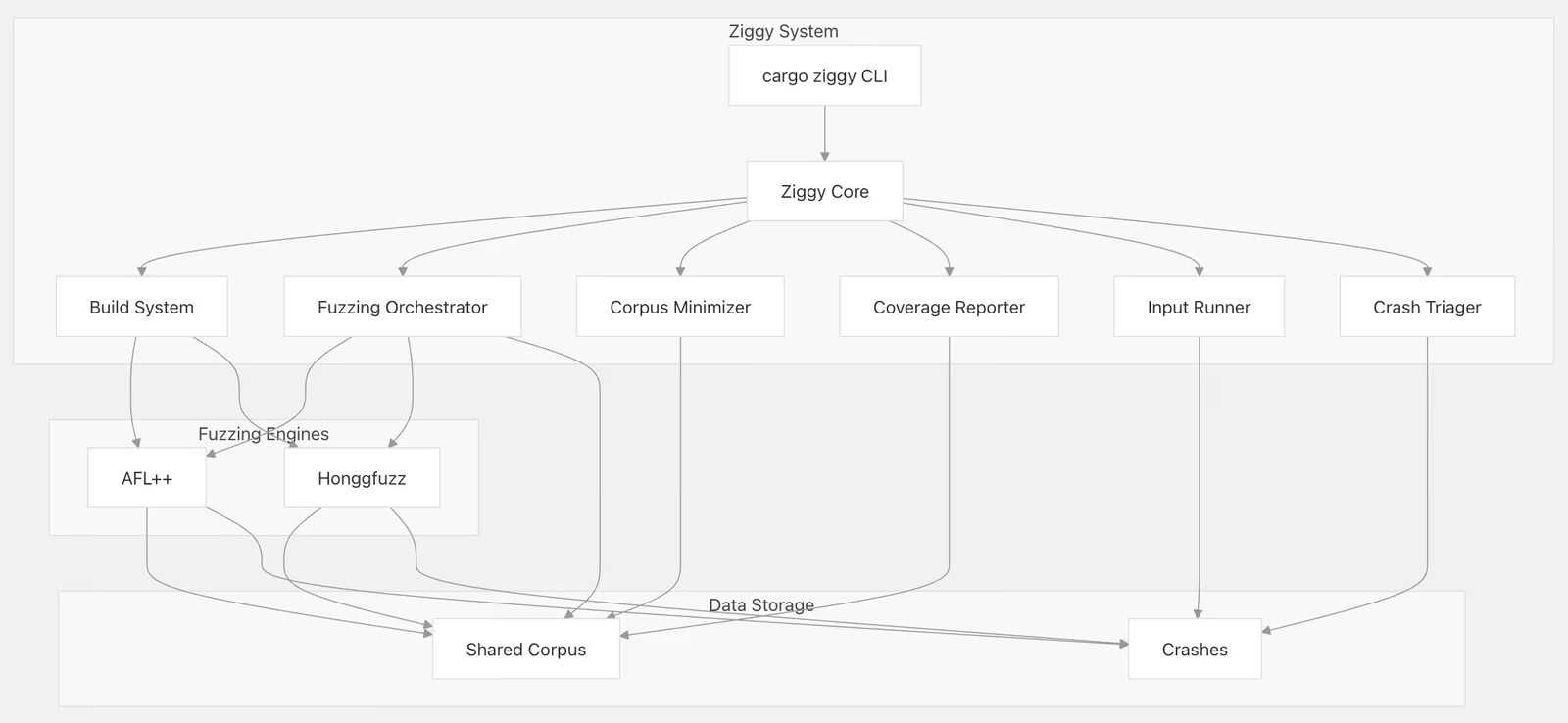

Here’s the summary that Deepwiki generated for our fuzzing orchestration tool, Ziggy:

It reveals available tool options and workflow interactions — insights that take considerable time to uncover manually.

Level 2: Code insights

Large codebases can feel like labyrinths. AI helps map these complex structures by analyzing dependencies and code flows at lightning speed. When we audit a large open-source project or a blockchain platform with tens of thousands of lines of code, we leverage AI tools to rapidly generate a structural outline, highlight entry points and critical modules, and even trace how data moves through the system. This bird’s eye view empowers our expert auditors to navigate the codebase more efficiently and ensures that no critical component is overlooked.

We don’t rely on secret tools. After evaluating many IDE plugins, we chose GitHub Copilot — with OpenRouter as the backend — so we can easily switch between models. That flexibility is critical: the “best” model on paper isn’t always the best fit for the code we audit. For instance, blockchain projects in Rust are highly specialized, and popular models like OpenAI’s o4-mini-high or Anthropic’s Claude 4.0 Sonnet often fall short.

To identify the best model for a use case, we benchmark them using real-world, complex problems from the specific ecosystem we’re auditing. We run the models against the benchmark and select the top performer. The winner often depends on the language — Rust, Go, or C++ — and the domain, whether it’s a blockchain platform or a VPN solution, for example. We regularly update our benchmarks to keep our evaluations accurate.

Level 3: Quality assurance

After our experts complete an audit, we bring in AI as a second reviewer to support our quality assurance process. The AI re-scans the audited code to flag subtle issues that might have slipped through, including edge cases or uncommon attack patterns. Think of it as an automated second pair of eyes: It doesn’t make final decisions, but it adds a safety net to catch oversights.

How effective is it? We’ve seen a handful of real issues — some low, some high severity — that would have gone unnoticed without it. However, most critical vulnerabilities identified by our auditors were missed by all AI tools entirely. AI fails when it comes to spotting complex relationships, logical flaws that span multiple functions or files, and broken security assumptions that are defined in one part of the code but violated elsewhere. But it has the advantage of knowing about thousands of publicly disclosed vulnerabilities and can recognize similar patterns — like static analysis on steroids. That’s something no human auditor can match.

In short, AI complements our process without replacing it — proving that a human-centric approach remains as effective as ever.

To get meaningful results, we rely on carefully crafted prompts and the right model for the audit target. The AI tools are far from perfect: around 80% of their findings are false positives, sounding plausible at first, but incorrect, nonetheless. Each AI-identified issue requires manual review, consuming valuable audit time. This high rate of false positives has its advantages: The auditors can never blindly trust the tool. It’s a supporter.

This is also why we only bring in AI tools for vulnerability discovery after an audit package is complete, and strictly as a QA step. Our results show this is the right call.

Confidentiality for closed-source audits

You may have noticed we only mentioned OpenRouter as our provider of choice. That’s intentional. We rely on public API providers for AI models. While we exclusively use those that promise not to store or inspect our prompts, we don’t take that as a guarantee.

The approach described above applies strictly to open-source audits, which account for about 80% of our code review work. For closed-source projects, where proprietary code must remain confidential, we’re taking extra steps.

We’re setting up an SRLabs-internal AI server to keep sensitive data within our controlled environment. Hosting today’s capable models locally is no small feat. Many require 400–900 GB of GPU VRAM, and such a setup would cost over $500,000.

We’ve identified a few strong-performing models that deliver results close to state-of-the-art, but with a manageable footprint — around 100 GB of GPU RAM. These models support closed-source audits, and are subject to SRLabs-internal fine-tuning and experimentation.

The future of AI in our code audit workflow

Research and industry trends show AI models are advancing rapidly. By 2026, a well-tuned model may be able to fully replace a junior code auditor for certain standard tasks.

It’s crucial for us to (a) continuously improve our expertise as human code auditors, and (b) refine how we harness AI to enhance the quality, speed, and value of our services.

The pitfalls of AI

Beyond the true and false positives we’ve discussed, there’s another critical concern: the human factor.

How good are your navigation skills without your phone? If you remember life before Apple or Google Maps, you could find your way to a friend’s place across town using just your brain. These days, many of us would be lost without GPS.

Overreliance on AI in technical work can dull expertise, hinder creativity, and weaken critical thinking. That’s why we’re peculiar about the exact ways of integrating AI into our workflow: to enhance, not replace, the human mind behind the audit. We want our auditing skills to be sharper than ever thanks to the support of powerful tools.

Becoming a better auditor through the Socratic method

We spent time reflecting on how AI could help us become better code auditors — not just faster ones. The answer lies in an old idea: the Socratic method.

We can encourage deeper analysis and more deliberate reasoning by having the AI ask auditors probing, Socratic-style questions about the code. Much like Socrates in Ancient Greece, it isn’t there to hand over answers — it’s there to challenge assumptions, guide thought processes and illuminate overlooked angles.

It’s a promising concept. But in practice, most AI models — even the most powerful and expensive — struggle with this role. They default to offering solutions or highlighting perceived issues, which can reinforce false positives and mislead auditors. Instead, we want an AI that guides without leading — one that helps ensure the threat landscape has been thoroughly assessed without biasing the process.

We’re researching which models can best support this Socratic approach. The shortlist is… short. But we’re experimenting with custom prompts and workflows to make this method practical in our audits.

Fine-tuning

Since 2019, we’ve been the lead auditors for the Polkadot blockchain ecosystem, uncovering over 1,000 security issues across countless codebases. We’re collecting these findings into a dataset for AI training — because if anyone’s going to teach machines how to find critical issues in the Polkadot blockchain, it’s us.

But here’s the catch: not all findings make good training data. Some issues are trivially detectable by today’s static analysis tools — think “grep-level obvious.” Others are too high-level and abstract to fit into a context window for model training. So, we filter out the extremes: anything easily found by existing tools, and anything too large or diffuse to represent meaningfully in a training set.

What’s left is a curated dataset of subtle, real-world bugs that challenge even seasoned auditors. Our next step is to fine-tune various models on this dataset to find strong specialists. An early experiment showed promising results with LLaMA 3.1 outperforming Qwen 2.5. Next, we’ll test larger and newer ones to improve the detection rate.

Stay tuned — this is where things get interesting. 🚀

Conclusion

AI already accelerates our audits, but disciplined human expertise still drives every finding. By running the best-fit models, we squeeze extra coverage from sprawling codebases. Continuous benchmarking, Socratic prompts, and targeted fine-tuning ensure the tools will sharpen our auditors rather than dull them. As models mature, SRLabs will keep raising the bar — proving that when smart humans and smart machines work side by side, security wins.